NVIDIA's Answer: Bringing GPUs to More Than CNNs - Intel's Xeon Cascade Lake vs. NVIDIA Turing: An Analysis in AI

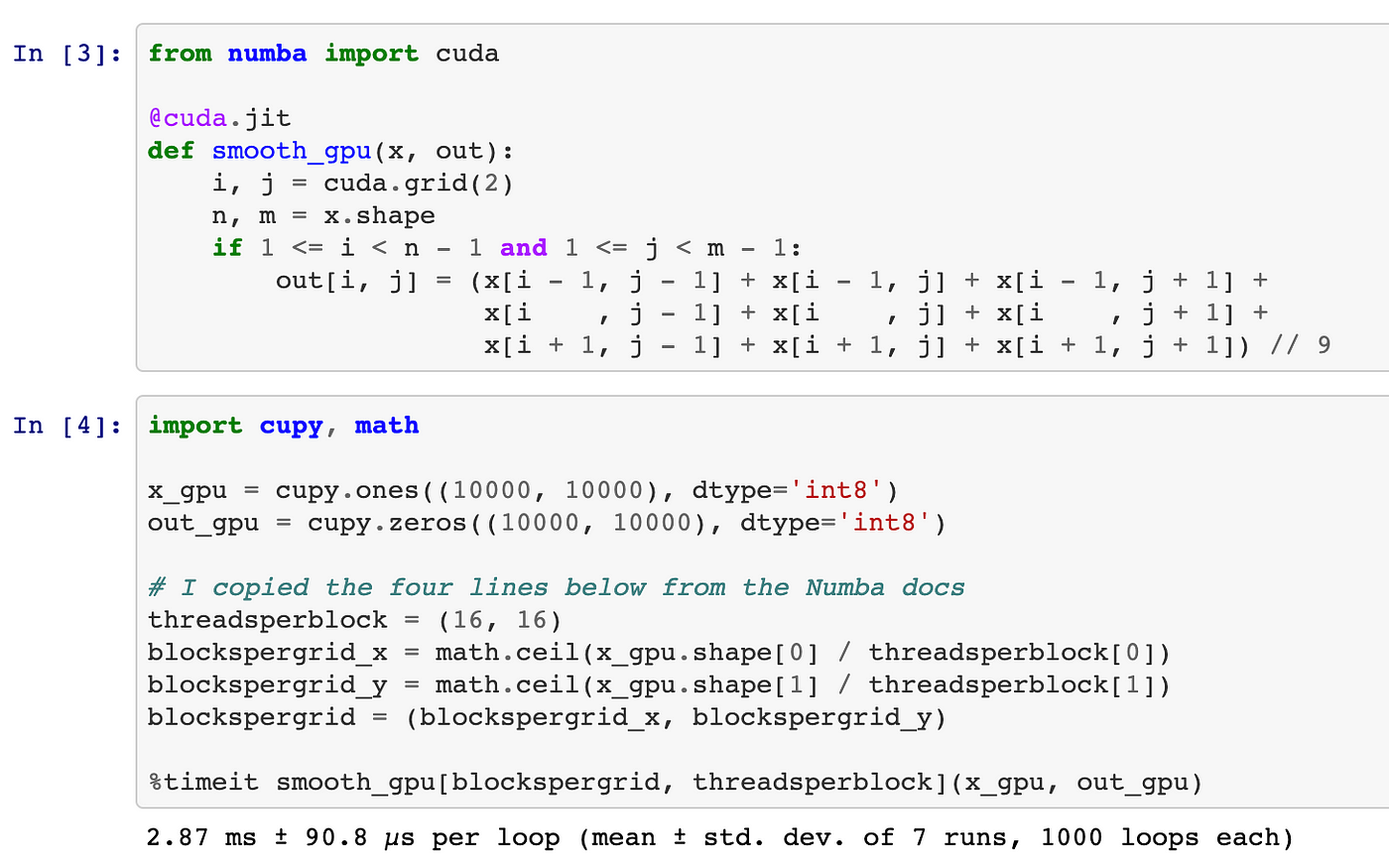

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Hands-On GPU Programming with Python and CUDA: Explore high-performance parallel computing with CUDA: Tuomanen, Dr. Brian: 9781788993913: Books - Amazon

![[D] Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple : r/MachineLearning](https://external-preview.redd.it/7lLQAM6QKl67UsD5ElJ1PF7GwjblZnPcXgdqHv64b_A.jpg?width=640&crop=smart&auto=webp&s=03b8c9244d7278f8daf9813ac72d3a76008021bd)

[D] Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple : r/MachineLearning

Ki-Hwan Kim - GPU Acceleration of a Global Atmospheric Model using Python based Multi-platform - YouTube